Just as we humans call upon our senses to provide us with data about our environment, so smartphones and tablet devices use their own digital senses – touchscreen, geolocation, orientation, direction and motion – to provide interaction and to tailor applications and games to the user and their real-world surroundings. Adding external accessories will give a mobile device even more senses – these include: add-ons for health, such as measuring blood sugar levels or tracking blood pressure; add-ons for fitness, such as heart rate monitors and in-shoe sensors; and add-ons for small businesses, such as credit card readers, for accepting payments.

There are three main ways that developers can access the data reported by these device sensors:

- Using native operating system application programming interfaces (APIs) for each platform (e.g. Android, iOS, Windows Phone) they wish to support.

- Using a framework such as PhoneGap, which enables developers to write their code once in HTML5 and package it as native apps for each operating system and device, accessing device features through native APIs.

- Using standard APIs created through the W3C, which work across different devices using JavaScript within mobile browsers such as Mobile Safari, Chrome, Opera Mobile and Firefox.

The advantage of the third, Web-based, approach is that it sidesteps the app-store approval process that would otherwise be required each time the app is updated or a bug fix is released. Nor do users have to update their apps manually, since they receive the latest version automatically the next time they load it. And it still allows functional and beautiful apps to be built. This is the approach that appeals to me most, and the one I will cover in detail in this article.

I will discuss each sensor in turn and describe how to access its data through JavaScript, giving examples of real-world usage and offering some of my personal experience for getting the best results. Refer to the Mobile HTML5 Compatibility Tables for full details of which browsers and devices currently support accessing sensor data.

The geolocation sensor

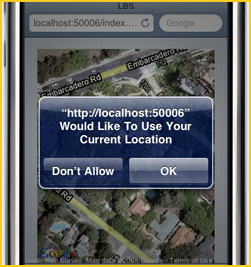

This sensor is the brains behind mobile mapping applications, locating the user’s position on Earth to help them plot routes to different destinations. The sensor uses a combination of approaches, which may include WiFi positioning, GSM cell tower triangulation and GPS satellite positioning, to retrieve a latitude and longitude coordinate of the user’s location. To protect the user’s privacy, the Web app or site must request permission (as specified in the W3C guidelines) before accessing data from this sensor. The user is presented with a dialogue asking them to permit access to their location (pictured right).

Using this geolocation data allows developers to improve the user experience (UX) on their sites by, for example, automatically pre-filling city and country fields in Web forms, or by looking up which films are playing, and when, at cinemas in the locality. Using this data in conjunction with the Google Maps API means we can build dedicated mapping and routing apps that dynamically update the user interface (UI) as the user changes location. Knowing the location also enables an app to show photos taken in the user’s vicinity using the Panoramio API, or a training assistant to calculate how long it takes a runner to cover a certain distance, with the ability to compare performance with past and future runs.
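For the running example, the distance covered can be estimated from successive latitude/longitude fixes. This is a minimal sketch using the haversine formula, assuming a spherical Earth with a mean radius of 6,371 km (accurate enough for a training app, not for surveying); distanceInKm, routeDistanceInKm and paceMinutesPerKm are hypothetical helpers, not part of any API.

```javascript
// Great-circle distance between two points using the haversine formula,
// assuming a spherical Earth with a mean radius of 6,371 km
function distanceInKm(lat1, lng1, lat2, lng2) {
  var R = 6371,
      toRad = function (deg) { return deg * Math.PI / 180; },
      dLat = toRad(lat2 - lat1),
      dLng = toRad(lng2 - lng1),
      a = Math.sin(dLat / 2) * Math.sin(dLat / 2) +
          Math.cos(toRad(lat1)) * Math.cos(toRad(lat2)) *
          Math.sin(dLng / 2) * Math.sin(dLng / 2);
  return 2 * R * Math.atan2(Math.sqrt(a), Math.sqrt(1 - a));
}

// Hypothetical helper: total length of a run recorded as an array of
// { lat, lng } points, e.g. collected from successive watchPosition() callbacks
function routeDistanceInKm(points) {
  var total = 0;
  for (var i = 1; i < points.length; i++) {
    total += distanceInKm(points[i - 1].lat, points[i - 1].lng,
                          points[i].lat, points[i].lng);
  }
  return total;
}

// Hypothetical helper: average pace in minutes per kilometer
function paceMinutesPerKm(distanceKm, elapsedMs) {
  if (distanceKm <= 0) {
    return null; // no distance covered yet, so no meaningful pace
  }
  return elapsedMs / 60000 / distanceKm;
}
```

Comparing one run with another is then a matter of storing each run's distance and pace, for example 5 km in 25 minutes gives paceMinutesPerKm(5, 1500000), a pace of 5 minutes per kilometer.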

The W3C Geolocation API allows us to access the user’s location coordinates through JavaScript, providing either a one-off position lock (getCurrentPosition()) or the ability to track the user continuously as they move (watchPosition()). The API also tells us the accuracy of the returned coordinates and lets us specify whether we want the location to be returned normally or with high precision (the enableHighAccuracy option). Note that a high-precision location will take a little longer to pinpoint and may consume more battery power in the process. The following example shows how this API could be used to update a map on screen dynamically based on the user’s location, using Google’s Static Maps API for simplicity.

```javascript
// Detect the API before using it
if (navigator.geolocation) {
  // Get a reference to a <div id="map"> tag on the page to insert the map into
  var mapElem = document.getElementById("map"),

    // Define a function to execute once the user's location has been established,
    // plotting their latitude and longitude as a map tile image
    successCallback = function(position) {
      var lat = position.coords.latitude,
        lng = position.coords.longitude;

      // Note: Google's Static Maps API now also expects an API key (key parameter)
      mapElem.innerHTML = '<img src="https://maps.googleapis.com/maps/api/staticmap?markers=' + lat + ',' + lng + '&zoom=15&size=300x300" />';
    },

    // Define a function to execute if the user's location couldn't be established
    errorCallback = function(error) {
      alert("Sorry! I couldn't get your location.");
    };

  // Start watching the user's location, accepting a cached position no more than
  // one second old (1s = 1000ms), and execute the appropriate callback function
  // based on whether the user was successfully located or not
  navigator.geolocation.watchPosition(successCallback, errorCallback, {
    maximumAge: 1000
  });
}
```

To prevent JavaScript errors, use feature detection to ensure that access to the geolocation sensor is available before coding against its API. It’s possible to request access to the user’s location on page load, but this is best avoided, as it forces users to decide whether to share their location before they know how it will be used. Providing a button for the user to press to grant access to their location gives them a greater sense of control over the app, which makes them more likely to grant permission.

If the user denies access to their location, you may be able to locate their general position to city or country level using an IP-based fallback. If this is not suitable, explain politely to the user that you are unable to provide them with some specific functionality until they grant you permission, thus letting them feel as if they’re in control of your page or application.
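Putting that advice into practice, here is a minimal sketch (one possible approach, not the only one) that requests a one-off, high-accuracy position only after the user presses a button, and shows a polite message if the request fails. The element IDs locate-me and location-status, the helper names requestLocation and describeGeolocationError, and the message wording are hypothetical; getCurrentPosition(), its enableHighAccuracy, timeout and maximumAge options, and the numeric error codes come from the W3C Geolocation API.

```javascript
// Turn a Geolocation API error into a polite, user-facing message.
// Error codes defined by the W3C Geolocation API:
// 1 = PERMISSION_DENIED, 2 = POSITION_UNAVAILABLE, 3 = TIMEOUT
function describeGeolocationError(error) {
  switch (error && error.code) {
    case 1:
      return 'Location access is turned off, so nearby results are unavailable. ' +
             'You can enable it in your browser settings at any time.';
    case 2:
      return 'Your location could not be determined right now.';
    case 3:
      return 'Finding your location took too long. Please try again.';
    default:
      return 'Sorry! Something went wrong while finding your location.';
  }
}

// Request a single position fix; the geolocation object is passed in so the
// function can also be exercised with a stand-in outside the browser
function requestLocation(geolocation, onLocated, onMessage) {
  if (!geolocation) {
    onMessage('Your browser does not support location lookup.');
    return;
  }
  geolocation.getCurrentPosition(
    function (position) {
      // accuracy is the radius of uncertainty, in meters
      onLocated(position.coords.latitude, position.coords.longitude,
                position.coords.accuracy);
    },
    function (error) {
      onMessage(describeGeolocationError(error));
    },
    {
      enableHighAccuracy: true, // slower and more battery-hungry
      timeout: 10000,           // give up after 10 seconds
      maximumAge: 60000         // accept a cached position up to a minute old
    }
  );
}

// Browser wiring: only ask for the position after an explicit button press
if (typeof document !== 'undefined') {
  var locateButton = document.getElementById('locate-me'),
      statusElem = document.getElementById('location-status');
  if (locateButton && statusElem) {
    locateButton.addEventListener('click', function () {
      requestLocation(navigator.geolocation, function (lat, lng, accuracy) {
        statusElem.textContent = 'Located within ' + Math.round(accuracy) +
          'm: ' + lat + ', ' + lng;
      }, function (message) {
        statusElem.textContent = message;
      });
    }, false);
  }
}
```

An IP-based fallback, if you use one, would slot into the error branch alongside the message.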

Further reading

- Using Geolocation – Mozilla Developer Network.

- Exception Handling with the Geolocation API – Jef Claes.

- A Simple Trip Meter Using the Geolocation API – Michael Mahemoff, HTML5 Rocks.

The touch sensor

Touchscreens allow users to control the interface of their mobile devices in a simple and natural manner. The touch sensors underneath the screen can detect contact by one or more fingers and track their movement across the screen. In the browser, each such contact produces a touch event (all user interactions with Web pages/apps, e.g. taps and clicks, are called events).

The data from the touch sensor is accessed in JavaScript using the W3C Touch Events API. This enables the enhancement of Web sites/apps with carousels and slideshows that react to finger swipes. It also allows the development of advanced Web apps that allow people to draw pictures using their fingers (see Artistic Abode’s Web app) or to test their memory by flipping over cards with a finger to find the pairs (see the MemoryVitamins.mobi Web app).

Figure 1: Image shows: a picture being drawn using a finger on a touchscreen, using Artistic Abode’s Web app.
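A carousel that reacts to finger swipes only needs to compare where a finger lands (touchstart) with where it is lifted (touchend). This is a minimal sketch assuming a hypothetical <div id="carousel"> and hypothetical showNext() and showPrevious() functions defined elsewhere; the 50-pixel minimum distance is an arbitrary choice.

```javascript
// Classify a finger movement as a horizontal swipe. It counts only if the
// finger travels at least minDistance pixels horizontally and further
// horizontally than vertically (a mostly vertical movement is probably a scroll)
function classifySwipe(startX, startY, endX, endY, minDistance) {
  var dx = endX - startX,
      dy = endY - startY;
  if (Math.abs(dx) < minDistance || Math.abs(dx) < Math.abs(dy)) {
    return null;
  }
  return dx < 0 ? 'left' : 'right';
}

if (typeof document !== 'undefined') {
  var carousel = document.getElementById('carousel'), // hypothetical element
      startX = 0,
      startY = 0;
  if (carousel) {
    carousel.addEventListener('touchstart', function (e) {
      // Remember where the first finger landed
      startX = e.touches[0].clientX;
      startY = e.touches[0].clientY;
    }, false);
    carousel.addEventListener('touchend', function (e) {
      // A lifted finger is no longer in e.touches, so read it from changedTouches
      var touch = e.changedTouches[0],
          direction = classifySwipe(startX, startY, touch.clientX, touch.clientY, 50);
      if (direction === 'left') { showNext(); }      // hypothetical
      if (direction === 'right') { showPrevious(); } // hypothetical
    }, false);
  }
}
```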

Whenever the user touches, moves, or removes a finger from the screen, a touch event (touchstart, touchmove or touchend) fires in the browser. We assign an event listener to each event type, which runs a specific code routine whenever the user interacts with the screen. As well as giving us the location of the current touch point, the event also tells us which page element was touched and provides lists of all finger touches currently on screen (touches), those that started within the target element (targetTouches), and those which have changed since the last touch event fired (changedTouches).

Certain touch actions trigger behavior within the operating system: holding down a finger over an image might make a context menu appear, or two fingers moving over a page might trigger a page zoom. If you are coding for the touch sensor, you can override this default behavior within your event-handler function by calling event.preventDefault().

The example below shows how this API can be used to display the current number of touches on the screen at any one time, updating as the user’s fingers are added to or removed from the screen.
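To make the three touch lists concrete, here is a small sketch that condenses a touch event into a plain object. summarizeTouchEvent is a hypothetical helper name, and logging every touchmove to the console is purely for experimentation.

```javascript
// Condense the three touch lists carried by a touch event:
// touches        = every finger currently on the screen
// targetTouches  = fingers on screen that started on this event's target element
// changedTouches = fingers whose state changed in this particular event
function summarizeTouchEvent(e) {
  var first = e.changedTouches[0];
  return {
    type: e.type,
    target: e.target,
    onScreen: e.touches.length,
    onTarget: e.targetTouches.length,
    changed: e.changedTouches.length,
    // clientX/clientY are relative to the viewport, in CSS pixels
    x: first ? first.clientX : null,
    y: first ? first.clientY : null
  };
}

if (typeof window !== 'undefined') {
  window.addEventListener('touchmove', function (e) {
    console.log(summarizeTouchEvent(e));
  }, false);
}
```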

```javascript
// Define an event handler to execute when a touch event occurs on the screen
var handleTouchEvent = function(e) {
  // Get the list of all touches currently on the screen
  var allTouches = e.touches,
    allTouchesLength = allTouches.length,

    // Get a reference to an element on the page to write the total number of touches
    // currently on the screen into
    touchCountElem = document.getElementById("touch-count");

  // Prevent the default browser action from occurring when the user touches and
  // holds their finger to the screen
  if (e.type === 'touchstart') {
    e.preventDefault();
  }

  // Write the number of current touches onto the page
  touchCountElem.innerHTML = 'There are currently ' + allTouchesLength + ' touches on the screen.';
};

// Assign the event handler to execute when a finger touches ('touchstart') or is removed from ('touchend') the screen.
// Current browsers treat window-level touch listeners as passive by default, which would make
// preventDefault() a no-op, so opt out explicitly with { passive: false }
window.addEventListener('touchstart', handleTouchEvent, { passive: false });
window.addEventListener('touchend', handleTouchEvent, false);
```

Apple iOS devices support a more advanced set of JavaScript events relating to gestures. These fire when the user pinches or rotates two or more fingers on the screen, and report back the scale and rotation of the movement. These events are device-specific, so if you wish to replicate them on other devices, you might find Hammer.js useful; it is a JavaScript library specializing in touch gestures.
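Without relying on Hammer.js or Apple’s gesture events, the scale and rotation of a pinch can be approximated from two standard touch points: scale is the ratio of the current finger separation to the starting separation, and rotation is the change in angle of the line joining the fingers. This sketch assumes a hypothetical element with id "zoomable"; twoFingerGesture is a hypothetical helper, not part of any library.

```javascript
// Approximate pinch scale and rotation from two pairs of touch points
function twoFingerGesture(startA, startB, currentA, currentB) {
  var separation = function (a, b) {
        var dx = b.clientX - a.clientX, dy = b.clientY - a.clientY;
        return Math.sqrt(dx * dx + dy * dy);
      },
      angle = function (a, b) {
        return Math.atan2(b.clientY - a.clientY, b.clientX - a.clientX);
      },
      startDist = separation(startA, startB),
      rotation = (angle(currentA, currentB) - angle(startA, startB)) * 180 / Math.PI;

  // Keep rotation within (-180, 180] so crossing the +/-180 degree line doesn't jump.
  // Screen y grows downward, so positive values mean clockwise on screen
  if (rotation > 180) { rotation -= 360; }
  if (rotation <= -180) { rotation += 360; }

  return {
    scale: startDist === 0 ? 1 : separation(currentA, currentB) / startDist,
    rotation: rotation
  };
}

if (typeof document !== 'undefined') {
  var gestureElem = document.getElementById('zoomable'), // hypothetical element
      startTouches = null;
  if (gestureElem) {
    gestureElem.addEventListener('touchstart', function (e) {
      if (e.touches.length === 2) {
        // Copy just the coordinates we need from the two starting touches
        startTouches = [
          { clientX: e.touches[0].clientX, clientY: e.touches[0].clientY },
          { clientX: e.touches[1].clientX, clientY: e.touches[1].clientY }
        ];
      }
    }, false);
    gestureElem.addEventListener('touchmove', function (e) {
      if (startTouches && e.touches.length === 2) {
        e.preventDefault(); // stop the browser's own pinch-zoom
        var g = twoFingerGesture(startTouches[0], startTouches[1], e.touches[0], e.touches[1]);
        gestureElem.style.transform = 'scale(' + g.scale + ') rotate(' + g.rotation + 'deg)';
      }
    }, { passive: false });
    gestureElem.addEventListener('touchend', function (e) {
      if (e.touches.length < 2) { startTouches = null; }
    }, false);
  }
}
```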

Further reading

- Developing for Multi-Touch Web Browsers – Boris Smus, HTML5 Rocks.

- Touch Events – Mozilla Developer Network

- Handling Gesture Events – Apple Safari Web content guide.

The orientation and direction sensors

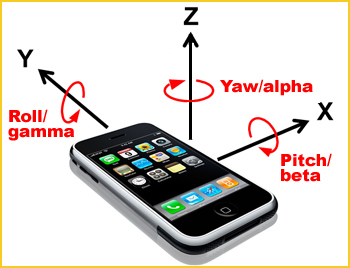

The orientation sensor establishes which way up the device is being held; it can also detect how the device is being positioned about three different rotational axes – assuming the device has an internal gyroscope. Some devices (such as the Apple iPhone) also include a magnetometer, which helps us to establish the precise direction the device is pointing in. The rotation around the X, Y, Z axes may respectively be referred to as roll, pitch and yaw as in planes and boats or in terms of degrees of beta, gamma and alpha rotation.

Figure 2: Image shows: rotation around the X, Y and Z axes of a mobile phone. Source: http://hillcrestlabs.com

By knowing the device orientation, we can adjust features of our sites to suit, such as repositioning a navigation menu above or beside the main content area, as appropriate. The non-standard window.orientation property and orientationchange event (since superseded by the W3C Screen Orientation API’s screen.orientation object) tell us the device’s current orientation, whether portrait or landscape, as well as whether it is being held upside down. The event fires at the very moment the device is re-orientated, so we can hook into it. If you only wish to change visible page styles when the device is re-orientated, consider using CSS Media Queries rather than JavaScript, as this provides the correct separation of concerns. The following example shows how to add a CSS class to your page’s <body> tag to indicate whether the device is in portrait or landscape orientation, allowing appropriate styling changes to be made.

```javascript
// Define an event handler function to execute when the device orientation changes
var onOrientationChange = function() {
  // The device is in portrait orientation if the device is held at 0 or 180 degrees
  // The device is in landscape orientation if the device is at 90 or -90 degrees
  var isPortrait = window.orientation % 180 === 0;

  // Set the class of the <body> tag according to the orientation of the device
  document.body.className = isPortrait ? 'portrait' : 'landscape';
};

// Execute the event handler function when the browser tells us the device has
// changed orientation
window.addEventListener('orientationchange', onOrientationChange, false);

// Execute the same function on page load to set the initial <body> class
onOrientationChange();
```
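The window.orientation property used in that example is non-standard and now deprecated. A hedged sketch of a more future-proof version prefers the W3C Screen Orientation API (screen.orientation.type and its change event) and falls back to window.orientation; getOrientationName is a hypothetical helper that takes the screen and window objects as parameters so its logic can be checked outside a browser.

```javascript
// Return 'portrait', 'landscape', or null if the orientation can't be determined
function getOrientationName(scr, win) {
  if (scr && scr.orientation && typeof scr.orientation.type === 'string') {
    // type is one of 'portrait-primary', 'portrait-secondary',
    // 'landscape-primary' or 'landscape-secondary'
    return scr.orientation.type.indexOf('portrait') === 0 ? 'portrait' : 'landscape';
  }
  if (win && typeof win.orientation === 'number') {
    // Legacy fallback: 0 or 180 degrees is portrait, 90 or -90 is landscape
    return win.orientation % 180 === 0 ? 'portrait' : 'landscape';
  }
  return null; // unknown: leave styling to CSS media queries
}

if (typeof window !== 'undefined') {
  var applyOrientationClass = function () {
    var name = getOrientationName(window.screen, window);
    if (name) { document.body.className = name; }
  };
  if (window.screen && window.screen.orientation) {
    window.screen.orientation.addEventListener('change', applyOrientationClass);
  } else {
    window.addEventListener('orientationchange', applyOrientationClass, false);
  }
  applyOrientationClass();
}
```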

Access to a built-in gyroscope allows us to create mobile versions of games like Jenga or Marble Madness to test the user’s steadiness and nerves. When a mobile device containing a gyroscope is rotated, the browser fires a deviceorientation event, as defined by the W3C DeviceOrientation Event specification; the data supplied with this event gives the device’s rotation around its three axes, measured in degrees. Feeding this data back into our app allows us to update the display according to our game logic. The example below shows how to use the built-in gyroscope and the deviceorientation event to rotate an image on the page in 3D according to the orientation of the device.

```javascript
// Get a reference to the first <img> element on the page
var imageElem = document.getElementsByTagName('img')[0],

  // Create an event handler function for processing the device orientation event
  handleOrientationEvent = function(e) {
    // Get the orientation of the device in 3 axes, known as alpha, beta and gamma,
    // in degrees. Beta and gamma are measured relative to the device lying flat;
    // alpha is relative to an arbitrary starting direction unless e.absolute is true
    var alpha = e.alpha,
      beta = e.beta,
      gamma = e.gamma,
      transform = 'rotateZ(' + alpha + 'deg) rotateX(' + beta + 'deg) rotateY(' + gamma + 'deg)';

    // Rotate the <img> element in 3 axes according to the device's orientation
    // (older WebKit browsers need the prefixed property)
    imageElem.style.webkitTransform = transform;
    imageElem.style.transform = transform;
  };

// Listen for changes to the device orientation using the gyroscope and fire the event
// handler accordingly
window.addEventListener('deviceorientation', handleOrientationEvent, false);
```
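For a tilt-controlled game such as Marble Madness, the beta and gamma angles can be mapped to a velocity. This is a minimal sketch under stated assumptions: tiltToVelocity and the marble object are hypothetical, 30 degrees is an arbitrary tilt for full speed, and the sign mapping (positive gamma moves right, positive beta moves down the screen) should be checked on real devices.

```javascript
// Convert front-to-back tilt (beta) and left-to-right tilt (gamma), in degrees,
// into a velocity of up to maxSpeed in each direction
function tiltToVelocity(beta, gamma, maxSpeed) {
  var FULL_TILT = 30, // degrees of tilt that produce maximum speed (assumed)
      clamp = function (degrees) {
        return Math.max(-1, Math.min(1, degrees / FULL_TILT));
      };
  return {
    // Devices without a gyroscope may report null angles, so treat them as 0
    vx: clamp(gamma || 0) * maxSpeed, // assumed: tilting right moves the marble right
    vy: clamp(beta || 0) * maxSpeed   // assumed: raising the top edge moves it down
  };
}

if (typeof window !== 'undefined') {
  var marble = { x: 0, y: 0, vx: 0, vy: 0 }; // hypothetical game state
  window.addEventListener('deviceorientation', function (e) {
    var v = tiltToVelocity(e.beta, e.gamma, 5);
    marble.vx = v.vx;
    marble.vy = v.vy;
  }, false);
}
```

A game loop would then add vx and vy to the marble’s position on each frame, keeping the event handler itself cheap.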

We could combine data from a magnetometer with a CSS rotation transform to construct a virtual compass or to align an on-screen map to the direction in which the user is facing. An experimental WebKit-specific property, webkitCompassHeading, is available in Apple’s Mobile Safari browser; it returns the current compass heading in degrees from due north whenever the device is moved, allowing us to update the display accordingly. There is no standardized API for accessing the magnetometer at present, although one is envisioned as an extension to the DeviceOrientation API already mentioned. The example below shows how to rotate an image of an arrow so that it keeps pointing north as the device turns.

```javascript
// Get a reference to the first <img> element on the page
var imageElem = document.getElementsByTagName('img')[0],

  // Create a function to execute when the compass heading of the device changes
  handleCompassEvent = function(e) {
    // Get the current compass heading of the device, in degrees from due north.
    // webkitCompassHeading is a WebKit extension available in Safari on iOS;
    // elsewhere it is undefined, so do nothing
    var compassHeading = e.webkitCompassHeading;
    if (typeof compassHeading !== 'number') {
      return;
    }

    // Rotate an image according to the compass heading value. The arrow pointing
    // to due north in the image will continue to point north as the device moves
    imageElem.style.webkitTransform = 'rotate(' + (-compassHeading) + 'deg)';
  };

// Observe the orientation of the device and call the event handler when it changes
window.addEventListener('deviceorientation', handleCompassEvent, false);
```
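Since webkitCompassHeading only exists in Safari on iOS, a compass usually needs a fallback. As a hedged sketch: when a browser reports an absolute orientation (e.absolute is true, as with Chrome’s deviceorientationabsolute event) and the device is held flat, the heading can be approximated as (360 - alpha) % 360, because alpha increases counterclockwise while compass headings increase clockwise. headingFromEvent is a hypothetical helper, and the approximation degrades as the device is tilted away from flat.

```javascript
// Return a compass heading in degrees from due north, or null if unavailable
function headingFromEvent(e) {
  // Safari on iOS: use the heading the browser computes for us
  if (typeof e.webkitCompassHeading === 'number') {
    return e.webkitCompassHeading;
  }
  // Absolute orientation, device assumed to be lying flat
  if (e.absolute === true && typeof e.alpha === 'number') {
    return (360 - e.alpha) % 360;
  }
  // Relative alpha has an arbitrary zero point, so it can't give a true heading
  return null;
}

if (typeof window !== 'undefined') {
  var arrowElem = document.getElementsByTagName('img')[0],
      eventName = 'ondeviceorientationabsolute' in window ?
        'deviceorientationabsolute' : 'deviceorientation';
  window.addEventListener(eventName, function (e) {
    var heading = headingFromEvent(e);
    if (heading !== null && arrowElem) {
      arrowElem.style.transform = 'rotate(' + (-heading) + 'deg)';
    }
  }, false);
}
```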

Further reading

- Detecting Device Orientation – Mozilla Developer Network

The motion sensor

The motion sensor tells us how quickly the user is accelerating the device along any of its three linear axes, X (side-to-side), Y (forward/back) and Z (up/down), and, for those devices with a gyroscope, the speed at which it rotates around its three rotational axes: X (beta rotation), Y (gamma) and Z (alpha).

The motion sensor is used in flip-to-silence apps and driving games. It opens up all sorts of possibilities, from letting users reset a form or undo an action by shaking their device to advanced Web apps such as a virtual seismograph.

The W3C DeviceMotionEvent JavaScript API fires an event whenever a smartphone or tablet is moved or rotated, passing on sensor data that gives the device’s acceleration (in meters per second squared) and rotation rate (in degrees per second). Acceleration data is given in two forms: one that includes the effect of gravity (accelerationIncludingGravity) and one that excludes it (acceleration). In the former case, the device reports an acceleration of about 9.81 meters per second squared even while perfectly still, because gravity is always acting on it. The example below shows how to report the current acceleration of the device to the user using the DeviceMotionEvent API.

```javascript
// Reference page elements for dropping current device acceleration values into
var accElem = document.getElementById('acceleration'),
  accGravityElem = document.getElementById('acceleration-gravity'),

  // Return the largest magnitude across the three axes, ignoring direction,
  // so that strong movements in a negative direction are not missed
  maxAbs = function(v) {
    return Math.max(Math.abs(v.x), Math.abs(v.y), Math.abs(v.z));
  },

  // Define an event handler function for processing the device's acceleration values
  handleDeviceMotionEvent = function(e) {
    // Get the current acceleration values in 3 axes, excluding gravity (may be
    // null on devices that cannot separate out gravity), and including gravity
    var acc = e.acceleration,
      accGravity = e.accelerationIncludingGravity;

    // Output to the user the greatest current acceleration value in any axis, as
    // well as the greatest value in any axis including the effect of gravity
    if (acc && acc.x !== null) {
      accElem.innerHTML = 'Current acceleration: ' + maxAbs(acc) + 'm/s^2';
    }
    if (accGravity && accGravity.x !== null) {
      accGravityElem.innerHTML = 'Value incl. gravity: ' + maxAbs(accGravity) + 'm/s^2';
    }
  };

// Assign the event handler function to execute when the device is moving
window.addEventListener('devicemotion', handleDeviceMotionEvent, false);
```
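The shake-to-reset idea can be sketched by comparing consecutive accelerationIncludingGravity readings: a sudden jump in any axis suggests a shake. createShakeDetector is a hypothetical helper, and the 15 m/s² threshold and the choice to reset the first form on the page are assumptions to tune through testing; a production version would probably also add a short cooldown so one shake doesn’t trigger several resets.

```javascript
// Return a devicemotion handler that calls onShake when the change in
// acceleration (including gravity) between two consecutive readings exceeds
// threshold m/s^2 in any axis
function createShakeDetector(threshold, onShake) {
  var last = null;
  return function (e) {
    var acc = e.accelerationIncludingGravity;
    if (!acc || acc.x === null) {
      return; // no usable reading from this device
    }
    if (last) {
      var delta = Math.max(
        Math.abs(acc.x - last.x),
        Math.abs(acc.y - last.y),
        Math.abs(acc.z - last.z)
      );
      if (delta > threshold) {
        onShake(delta);
      }
    }
    last = { x: acc.x, y: acc.y, z: acc.z };
  };
}

if (typeof window !== 'undefined' && 'DeviceMotionEvent' in window) {
  window.addEventListener('devicemotion', createShakeDetector(15, function () {
    var form = document.querySelector('form');
    if (form) { form.reset(); }
  }), false);
}
```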

Further reading

- Device Motion Event Class Reference – Apple Safari Developer Library.

- Orientation and Motion Data Explained – Mozilla Developer Network.

The missing sensors

At the time of writing (September 2012), neither the camera nor the microphone is accessible through JavaScript within a mobile browser. If we could access these sensors, we could (for example) capture an image of the user’s face to assign to an online account, or allow users to record audio notes for themselves.

Disagreements between different browser vendors are in part to blame for the lack of a standardized API for access to this data. The new W3C Media Capture and Streams API is gaining traction, however, and in time should enable developers to capture a still image or video stream from the camera or audio stream from the microphone (with the user’s permission). At present, this API is only available in Google’s Chrome browser on the desktop.
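The Media Capture and Streams API has since become widely available as navigator.mediaDevices.getUserMedia(), which returns a Promise and, in current browsers, is only exposed on secure (HTTPS) pages. The following is a minimal sketch assuming that modern form of the API; startCamera, the start-camera button ID and the use of the first <video> element on the page are hypothetical. It requests the camera with the user’s permission and attaches the resulting stream to the video element.

```javascript
// Request the camera and show the live stream in a <video> element.
// mediaDevices is passed in (navigator.mediaDevices in a browser) so the
// function can also be exercised with a stand-in
function startCamera(videoElem, mediaDevices) {
  if (!mediaDevices || typeof mediaDevices.getUserMedia !== 'function') {
    return Promise.reject(new Error('Camera access is not supported in this browser'));
  }
  // getUserMedia() resolves with a MediaStream once the user grants permission,
  // and rejects if they refuse or no camera is available
  return mediaDevices.getUserMedia({ video: true, audio: false }).then(function (stream) {
    videoElem.srcObject = stream;
    return stream;
  });
}

if (typeof document !== 'undefined' && typeof navigator !== 'undefined') {
  var cameraButton = document.getElementById('start-camera'), // hypothetical
      videoElem = document.querySelector('video');
  if (cameraButton && videoElem) {
    cameraButton.addEventListener('click', function () {
      startCamera(videoElem, navigator.mediaDevices).catch(function (err) {
        alert('Sorry! The camera could not be started: ' + err.message);
      });
    }, false);
  }
}
```

The <video> element needs the autoplay attribute (and, on iOS, playsinline), or a call to its play() method, for the live stream to start playing.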

Further reading

- Sketchbots: Camera Access Demo – Google Chrome Web Lab

Handling rapid fire events in JavaScript

Sensor data can change quickly, as a finger moves across the touchscreen or as the device is moved or reoriented, so any associated JavaScript events will fire rapidly, often only a few milliseconds apart. If these events directly trigger processor-intensive calculations or comparatively slow Document Object Model (DOM) manipulations, a handler may not finish before the next event fires. Because JavaScript runs on a single thread, the pending events then queue up, consuming extra memory on the device and making the Web app feel unresponsive.

For these types of event, consider using the event-handler function to do no more than save current values from the sensor data into variables. Then move your calculations or DOM manipulations into a new function: reading out the sensor values stored in those variables, executing that function on a fixed timer using setInterval or setTimeout, and ensuring it executes less frequently than the rapid fire event it replaces. The code below shows how we might adjust the earlier gyroscope example to apply this technique.

```javascript
// Create variables to store the data returned by the device orientation event
var alpha = 0,
  beta = 0,
  gamma = 0,
  imageElem = document.getElementsByTagName('img')[0],

  // Update the event handler to do nothing more than store the values from the event
  handleOrientationEvent = function(e) {
    alpha = e.alpha;
    beta = e.beta;
    gamma = e.gamma;
  },

  // Add a new function to perform just the image rotation using the stored variables
  rotateImage = function() {
    var transform = 'rotateZ(' + alpha + 'deg) rotateX(' + beta + 'deg) rotateY(' + gamma + 'deg)';
    imageElem.style.webkitTransform = transform;
    imageElem.style.transform = transform;
  };

// Connect the event to the handler function as normal
window.addEventListener('deviceorientation', handleOrientationEvent, false);

// Execute the new image rotation function once every 500 milliseconds, instead of
// every time the event fires, effectively improving application performance
window.setInterval(rotateImage, 500);
```
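A variation on the setInterval approach, sketched here assuming the browser supports requestAnimationFrame: the event handler still only records the newest event, but the expensive update is scheduled at most once per animation frame, and only when new data has arrived, so no timer keeps running while the device is still. coalesceToFrames is a hypothetical helper; its optional schedule parameter exists purely so it can be tested without a browser.

```javascript
// Wrap render so that, however often the returned handler is called, render
// runs at most once per animation frame and always receives the newest event
function coalesceToFrames(render, schedule) {
  var latestEvent = null,
      frameRequested = false;

  schedule = schedule || function (callback) {
    return window.requestAnimationFrame(callback);
  };

  return function (e) {
    // Cheap work only: remember the newest sensor data
    latestEvent = e;
    if (!frameRequested) {
      frameRequested = true;
      schedule(function () {
        frameRequested = false;
        // Expensive work (DOM updates) happens here, once per frame
        render(latestEvent);
      });
    }
  };
}

if (typeof window !== 'undefined' && window.requestAnimationFrame) {
  var rotatingImage = document.getElementsByTagName('img')[0];
  if (rotatingImage) {
    window.addEventListener('deviceorientation', coalesceToFrames(function (e) {
      rotatingImage.style.transform = 'rotateZ(' + e.alpha + 'deg) rotateX(' +
        e.beta + 'deg) rotateY(' + e.gamma + 'deg)';
    }), false);
  }
}
```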

Taking it further

The possibilities and opportunities for enhancing sites and creating Web apps based on sensor data using JavaScript are huge. I have considered a few examples here, and with a little creativity, I’m sure you can come up with many more. Try combining data from different sensors (such as geolocation and direction, or motion and orientation) to help you build enhanced sites and Web apps that respond to the user and their environment in new and exciting ways. Experiment and have fun!

Main image: Motherboard Fingerprint © Seamartini Studio, via www.shutterstock.com
