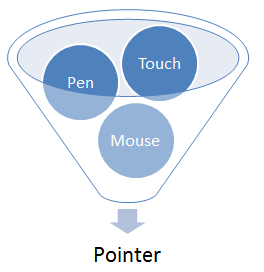

The Pointer Events API is an HTML5 specification that combines touch, mouse, pen and other inputs into a single unified API. It is less well supported than the Touch Events API, although support is growing: all the major browsers except Apple’s Safari are working on an implementation. There’s a colorful background to how the current state of browser support for this API came about, which we covered previously on mobiForge, but in this article we’ll just look at its usage.

The simple idea behind it is that mouse, touch and stylus input events are unified into a single event API.

Pointer Events

While the Touch Events API was defined in terms of Touches, the Pointer Events API is defined in terms of Pointers, where a Pointer is defined as:

a hardware agnostic representation of input devices that can target a specific coordinate (or set of coordinates) on a screen

PointerEvent inherits from and extends MouseEvent, so it has all the usual MouseEvent properties, such as clientX and clientY, as well as a few additional ones, such as tiltX, tiltY, and pressure. The pointer attributes we are most interested in are:

| Attribute | Description |
|---|---|
| pointerId | unique numeric identifier |
| screenX | horizontal coordinate relative to the screen |
| screenY | vertical coordinate relative to the screen |
| clientX | horizontal coordinate of point relative to the viewport, excluding scroll offset |
| clientY | vertical coordinate of point relative to the viewport, excluding scroll offset |
| pageX | horizontal coordinate of point relative to the page, including scroll offset |
| pageY | vertical coordinate of point relative to the page, including scroll offset |
| width | width of the pointer's contact area on the screen |
| height | height of the pointer's contact area on the screen |
| tiltX | angle of tilt of the stylus between the Z and X axes, where the X-Y plane is the surface of the screen |
| tiltY | angle of tilt of the stylus between the Z and Y axes, where the X-Y plane is the surface of the screen |
| pressure | pressure of contact on the screen, normalized to the range 0 to 1 |
| pointerType | class of Pointer: mouse, pen, or touch |
| isPrimary | whether this is the primary Pointer for its pointer type |

There are a few interesting things to note here:

- pointerId: lets us associate an event with a particular Pointer. Yes, multi-touch is a go!
- width/height: whereas mouse events are only associated with a single point, a pointer is a bigger beast that may cover an area.
- isPrimary: when multiple pointers (i.e. multi-touch) are detected, this property indicates whether this pointer is the primary pointer among the currently active pointers of its type. Only a primary pointer produces compatibility mouse events. More on this later.
- pressure/tilt/width/height: these properties open up types of interaction above and beyond basic touch support.
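To make the isPrimary and pointerType notes a little more concrete, here is a minimal sketch of a filter that a single-pointer control (a slider, say) might apply before reacting to an event. The function name `shouldHandle` and its `allowedTypes` parameter are our own, hypothetical choices; since it only reads standard PointerEvent properties, plain objects with the same fields can stand in for real events when trying it out.

```javascript
// Hypothetical helper: decide whether a single-pointer widget should react
// to a pointer event. Only standard PointerEvent properties are read.
function shouldHandle(event, allowedTypes) {
  // Ignore secondary pointers, e.g. a second finger during multi-touch
  if (!event.isPrimary) {
    return false;
  }
  // Optionally restrict to certain input classes: "mouse", "pen", "touch"
  if (allowedTypes === undefined) {
    return true;
  }
  return allowedTypes.indexOf(event.pointerType) !== -1;
}

// Browser usage, e.g.:
// element.addEventListener("pointerdown", function (e) {
//   if (shouldHandle(e, ["pen", "touch"])) { /* react to the press */ }
// }, false);
```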

The main event types defined by the PointerEvent interface are:

| event type | fired when… |
|---|---|
| pointerover | a pointer moves over an element (enters its hit test boundaries) |
| pointerenter | a pointer moves over an element or one of its descendants; differs from pointerover in that it doesn’t bubble |
| pointerdown | the active buttons state is entered: for touch and stylus, when contact is made with the screen; for mouse, when a button is pressed |
| pointermove | a pointer changes coordinates, or when a change in pressure, tilt or button state fires no other event |
| pointerup | the active buttons state is left, i.e. the stylus or finger leaves the screen, or the mouse button is released |
| pointercancel | the pointer is determined to have ended, e.g. on orientation change, accidental input (e.g. palm rejection), or too many pointers |
| pointerout | a pointer moves out of an element (leaves its hit test boundaries); also fired after pointerup for devices that don’t support hover, and after pointercancel |
| pointerleave | a pointer moves out of an element and its descendants |
| gotpointercapture | an element receives pointer capture for a pointer |
| lostpointercapture | an element loses pointer capture |
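A quick way to watch the sequence of events in the table above is to log every pointer event type on an element. The sketch below is only one way to do it: `logPointerEvents` and its `log` callback are hypothetical names, and `target` can be any object with an `addEventListener` method (a DOM element in the browser).

```javascript
// The pointer event types from the table above
var POINTER_EVENT_TYPES = [
  "pointerover", "pointerenter", "pointerdown", "pointermove",
  "pointerup", "pointercancel", "pointerout", "pointerleave",
  "gotpointercapture", "lostpointercapture"
];

// Hypothetical helper: attach one listener per type to `target` and report
// each event's type, pointerId and pointerType through `log`.
function logPointerEvents(target, log) {
  POINTER_EVENT_TYPES.forEach(function (type) {
    target.addEventListener(type, function (e) {
      log(e.type + " id=" + e.pointerId + " type=" + e.pointerType);
    }, false);
  });
}

// Browser usage, e.g.:
// logPointerEvents(document.getElementById("pointerzone"), console.log);
```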

Mouse events, pointer events, and touch events equivalence

For comparison, the following table shows the corresponding events from each of these input-related APIs:

| Mouse event | Touch event | Pointer event |
|---|---|---|
| mousedown | touchstart | pointerdown |
| mouseenter | | pointerenter |
| mouseleave | | pointerleave |
| mousemove | touchmove | pointermove |
| mouseout | | pointerout |
| mouseover | | pointerover |
| mouseup | touchend | pointerup |

As the table shows, it’s easier to draw an equivalence between pointer events and mouse events than between pointer events and touch events. However, although there is a rough equivalence between the mouse, pointer and touch API events, they’re not the same. This means that you shouldn’t reuse the same event handling code for events from the different APIs unless you know what you are doing, as the events do not play out the same way. For example, the target element for touchmove is the element in which the touch began, whereas for mousemove and pointermove the target is the element currently under the mouse or pointer (unless the pointer has been captured, which browsers may do implicitly for touch input).

Mouse Compatibility Events

A strength of this API is its compatibility with mouse events, so that sites built with mouse events will still work. This is intentional: to achieve it, the browser fires mouse events after the pointer events have been fired for a pointer. This only happens for the primary pointer of each pointer type, not for every pointer of that type. This makes sense when you consider that there can only be a single mouse input at a time. By firing mouse events for the primary input, websites built with mouse events still function for touch input, which makes transitioning to pointer events much easier.
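The flip side is that a page handling both pointer events and mouse events can end up reacting twice to the same press. According to the Pointer Events specification, cancelling the pointerdown event of a primary pointer suppresses the compatibility mousedown, and the mousemove and mouseup events that follow while the pointer is down; mouseover, mouseout, mouseenter and mouseleave are never prevented this way. A minimal sketch of such a handler, where the name `suppressCompatMouseEvents` is hypothetical and the boolean return value exists only to make it easy to test (event listeners ignore it):

```javascript
// Hypothetical pointerdown handler: cancelling pointerdown for the primary
// pointer asks the browser not to fire the compatibility mousedown (and the
// mousemove/mouseup that follow while the pointer is down) for it.
function suppressCompatMouseEvents(e) {
  // Only primary pointers produce compatibility mouse events anyway
  if (e.isPrimary) {
    e.preventDefault();
    return true;
  }
  return false;
}

// Browser usage:
// element.addEventListener("pointerdown", suppressCompatMouseEvents, false);
```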

Benefits of this API

The Pointer Events API specifies a way to handle mouse, touch and pen inputs at the same time, without having to write code for separate sets of events (mouse events and touch events).

Websites and apps today can be built to be consumed on desktop, mobile and tablet devices. While mouse input is found mostly on desktop computers, touch and pen input is found across all of these types of device. Traditionally, a developer has had to write extra code to respond to events from each of the different input types, or use a polyfill to handle all the events. Pointer Events changes this. Some of the benefits include:

- unified mouse and touch events mean that separate event listeners are not needed for each

- no need for separate code to get xy coordinates for mouse, touch or pen input

- supports pressure, width and height, and tilt angles where supported by hardware

- can distinguish between input types if and when you need to
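To illustrate the point about coordinates, here is a sketch of the kind of coordinate-reading code a page supporting both Touch Events and mouse events typically needs, next to what Pointer Events reduces it to. Both function names are hypothetical; the property names (`changedTouches`, `pageX`, `pageY`) are the standard ones.

```javascript
// Without Pointer Events: touch events carry a list of touches, while
// mouse events carry coordinates directly, so two code paths are needed.
function pagePointFromTouchOrMouse(e) {
  if (e.changedTouches && e.changedTouches.length > 0) {
    var t = e.changedTouches[0]; // first touch that changed in this event
    return { x: t.pageX, y: t.pageY };
  }
  return { x: e.pageX, y: e.pageY }; // MouseEvent
}

// With Pointer Events: mouse, pen and touch all arrive as PointerEvent,
// which inherits pageX/pageY from MouseEvent, so one path is enough.
function pagePointFromPointer(e) {
  return { x: e.pageX, y: e.pageY };
}
```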

The advantages are summarised in the table below (source: IE blog):

| | Mouse Events | Touch Events | Pointer Events |
|---|---|---|---|
| Supports mouse | Y | P | Y |
| Supports single-touch | P | Y | Y |
| Supports multi-touch | N | Y | Y |
| Supports pen, Kinect, and other devices | P | N | Y |
| Provides over/out/enter/leave events and hover | Y | N | Y |
| Asynchronous panning/zooming initiation for HW acceleration | N | N | Y |
| W3C specification | Y | Y | Y |

Y = yes, P = partial, N = no.

Pointer Events Examples

In this article we cover just the basics. The very first thing we can do is check for support for the Pointer Events API:

```javascript
if (window.PointerEvent) {
  // Pointer events are supported.
}
```
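The examples that follow all use the same pattern: listen for pointer events if `window.PointerEvent` exists, and fall back to mouse events otherwise. That choice can be wrapped up in one place. A sketch, where `getInputEventNames` is a hypothetical helper and `win` would normally be `window`:

```javascript
// Hypothetical helper: pick the down/move/up event names for this browser,
// mirroring the "pointer events, else mouse events" fallback used in the
// examples in this article.
function getInputEventNames(win) {
  if (win.PointerEvent) {
    return { down: "pointerdown", move: "pointermove", up: "pointerup" };
  }
  // Fallback for user agents that do not support Pointer Events
  return { down: "mousedown", move: "mousemove", up: "mouseup" };
}

// Browser usage:
// var names = getInputEventNames(window);
// canvas.addEventListener(names.down, draw, false);
```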

We’ll recreate some of the examples we used in our Touch Events API article, but this time with Pointer Events.

For our first example we’ll capture pointer input and display the coordinates of the pointer in the browser. So, first define a div to capture pointer events, and another div where we’ll display the page coordinates of the most recent pointer.

```html
<div id="coords"></div>
<div id="pointerzone"></div>
```

Next a little JavaScript to add an event listener to our pointer area, and attach a handler function, called pointerHandler.

```javascript
function init() {
  // Get a reference to our pointer div
  var pointerzone = document.getElementById("pointerzone");
  // Add an event handler for the pointerdown event
  pointerzone.addEventListener("pointerdown", pointerHandler, false);
}
```

In the pointerHandler function we can grab the coordinates of the pointer event:

```javascript
function pointerHandler(event) {
  // Get a reference to our coordinates div
  var coords = document.getElementById("coords");
  // Write the coordinates of the pointer to the div
  coords.innerHTML = 'x: ' + event.pageX + ', y: ' + event.pageY;
}
```

And we make sure that the init function is called after the page has loaded with:

```html
<body onload="init()"> ... </body>
```

And now we’re ready to go. If your device supports pointer events, you should be able to see the numeric page coordinates of your touches in the demo below (even if it doesn’t, we’ve added a fallback for mouse input).

Note: the examples in this article implement a fallback for mouse events, but not for touch events. If you want to see touch events working, take a look at our article on the Touch Events API, which has working examples. Otherwise, if the examples don’t work on your device, you can try them on a desktop computer or a Windows Phone device, or come back in a few months, when Pointer Events should be supported on your device!
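As a variation on pointerHandler, it can be instructive to print the same pointer in all three coordinate systems from the attribute table, along with its type and id. For a page scrolled down by some amount, pageY minus clientY gives that vertical scroll offset. A sketch; `formatPointer` is a hypothetical helper:

```javascript
// Hypothetical variation on pointerHandler's output: report one pointer in
// screen, viewport (client) and page coordinates, plus its type and id.
function formatPointer(e) {
  return e.pointerType + " #" + e.pointerId +
    " screen: " + e.screenX + "," + e.screenY +
    " client: " + e.clientX + "," + e.clientY +
    " page: " + e.pageX + "," + e.pageY;
}

// In pointerHandler you could then write:
// coords.innerHTML = formatPointer(event);
```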

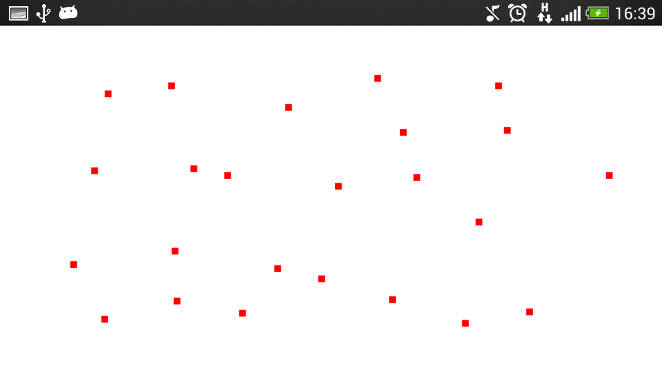

Display Pointer Events on screen

Next, we’ll build on this, and instead of displaying the numeric coordinates, we’ll display a dot at the pointer’s coordinates. To do this we’ll make use of the HTML5 canvas element. The main difference from the previous example is in the usage of the pointer coordinates. In this example, we define a function draw which draws a rectangle on the canvas with the following code:

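```javascript
var canvas = document.getElementById("mycanvas"),
    context = canvas.getContext("2d");

// offset is the canvas's page position, as returned by getOffset(canvas)
context.fillRect(event.pageX - offset.left, event.pageY - offset.top, 5, 5);
```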

There is also a new function getOffset which is applied to the pointer’s coordinates (this is explained below).

The full code is listed below:

```html
<html>
<head>
<style>
  /* Disable intrinsic user agent touch behaviors (such as panning or zooming) */
  canvas {
    touch-action: none;
  }
</style>
<script type='text/javascript'>
  var canvas, context, offset;

  function init() {
    canvas = document.getElementById("mycanvas");
    context = canvas.getContext("2d");
    // Page position of the canvas, shared with the draw handler
    offset = getOffset(canvas);

    if (window.PointerEvent) {
      canvas.addEventListener("pointerdown", draw, false);
    } else {
      // Provide fallback for user agents that do not support Pointer Events
      canvas.addEventListener("mousedown", draw, false);
    }
  }

  // Event handler called for each pointerdown (or mousedown) event:
  function draw(event) {
    context.fillRect(event.pageX - offset.left, event.pageY - offset.top, 5, 5);
  }

  // Helper function to get correct page offset for the Pointer coords
  function getOffset(obj) {
    var offsetLeft = 0;
    var offsetTop = 0;
    do {
      if (!isNaN(obj.offsetLeft)) {
        offsetLeft += obj.offsetLeft;
      }
      if (!isNaN(obj.offsetTop)) {
        offsetTop += obj.offsetTop;
      }
    } while (obj = obj.offsetParent);
    return {left: offsetLeft, top: offsetTop};
  }
</script>
</head>
<body onload="init()">
  <canvas id="mycanvas" width="500" height="500" style="border:1px solid black;"></canvas>
</body>
</html>
```

Display pointer position in browser—Live Demo

Activate the live demo by touching the image below, then touch the target area below, and a dot will be displayed at the position of each pointer.

Converting pointer event coordinates to canvas coordinates

If there are other elements on your webpage, the canvas will not sit at the top-left corner of the page, so it may be necessary to convert the pointer's page coordinates into coordinates relative to the canvas by subtracting an offset. We covered the details of this conversion in our touch events article, so you can read that if you want the background, but this is the function we use to compute the offset:

```javascript
function getOffset(obj) {
  var offsetLeft = 0;
  var offsetTop = 0;
  do {
    if (!isNaN(obj.offsetLeft)) {
      offsetLeft += obj.offsetLeft;
    }
    if (!isNaN(obj.offsetTop)) {
      offsetTop += obj.offsetTop;
    }
  } while (obj = obj.offsetParent);
  return {left: offsetLeft, top: offsetTop};
}
```
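An alternative to walking the offsetParent chain, and not the approach used in this article's demos, is to combine the canvas's `getBoundingClientRect()` with the pointer's clientX/clientY. Both are relative to the viewport, so scroll offsets cancel out. The sketch below also scales the result in case CSS stretches the canvas away from its width/height attributes. `toCanvasPoint` is a hypothetical helper, and it assumes the canvas has no border or padding (the bounding rect includes both).

```javascript
// Hypothetical helper: convert a pointer event's viewport coordinates into
// canvas drawing coordinates. `rect` is canvas.getBoundingClientRect();
// canvasWidth/canvasHeight are canvas.width/canvas.height.
// Assumes no canvas border or padding.
function toCanvasPoint(e, rect, canvasWidth, canvasHeight) {
  // clientX/clientY and the rect are both viewport-relative
  var x = e.clientX - rect.left;
  var y = e.clientY - rect.top;
  // Scale from CSS pixels to canvas pixels
  return {
    x: x * (canvasWidth / rect.width),
    y: y * (canvasHeight / rect.height)
  };
}

// Browser usage inside a pointer handler:
// var p = toCanvasPoint(event, canvas.getBoundingClientRect(),
//                       canvas.width, canvas.height);
// context.fillRect(p.x, p.y, 5, 5);
```

Here a 500×500 canvas displayed at 250×250 CSS pixels would map a pointer 50px into the element to x = 100 on the canvas.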

Pointer events gestures: the pointermove event

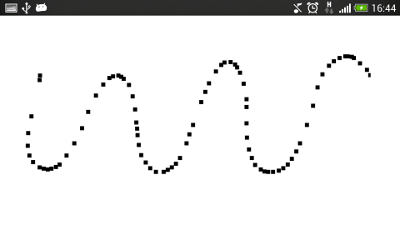

The previous examples have focussed on single pointer touches: a single tap with stylus or finger, or single mouse click, which we captured with the pointerdown event. But pointer events support far richer interaction than single taps and clicks. Just as we used the touchmove event from the touch events API, we can use the equivalent pointer event, pointermove, to capture swipes and gestures in the pointer events API.

We want the next example to work like this: when we detect a pointerdown event, we start tracing the pointer’s coordinates. To achieve this, we listen for the pointerdown event, and when it is detected we attach a listener for the pointermove event. Then, each time a pointermove event is detected, we draw at that point.

```javascript
canvas.addEventListener("pointerdown", function() {
  // Start listening for movement once the pointer is down
  canvas.addEventListener("pointermove", draw, false);
}, false);
```

So every time the pointermove event occurs we draw a dot, which should let us trace a line over the pointer path and draw shapes. But as we saw with our touch events example, this only works up to a point. The event handler only draws a dot at each pointer event's coordinates, so if your pointer moves too fast it draws a series of dots rather than a line:

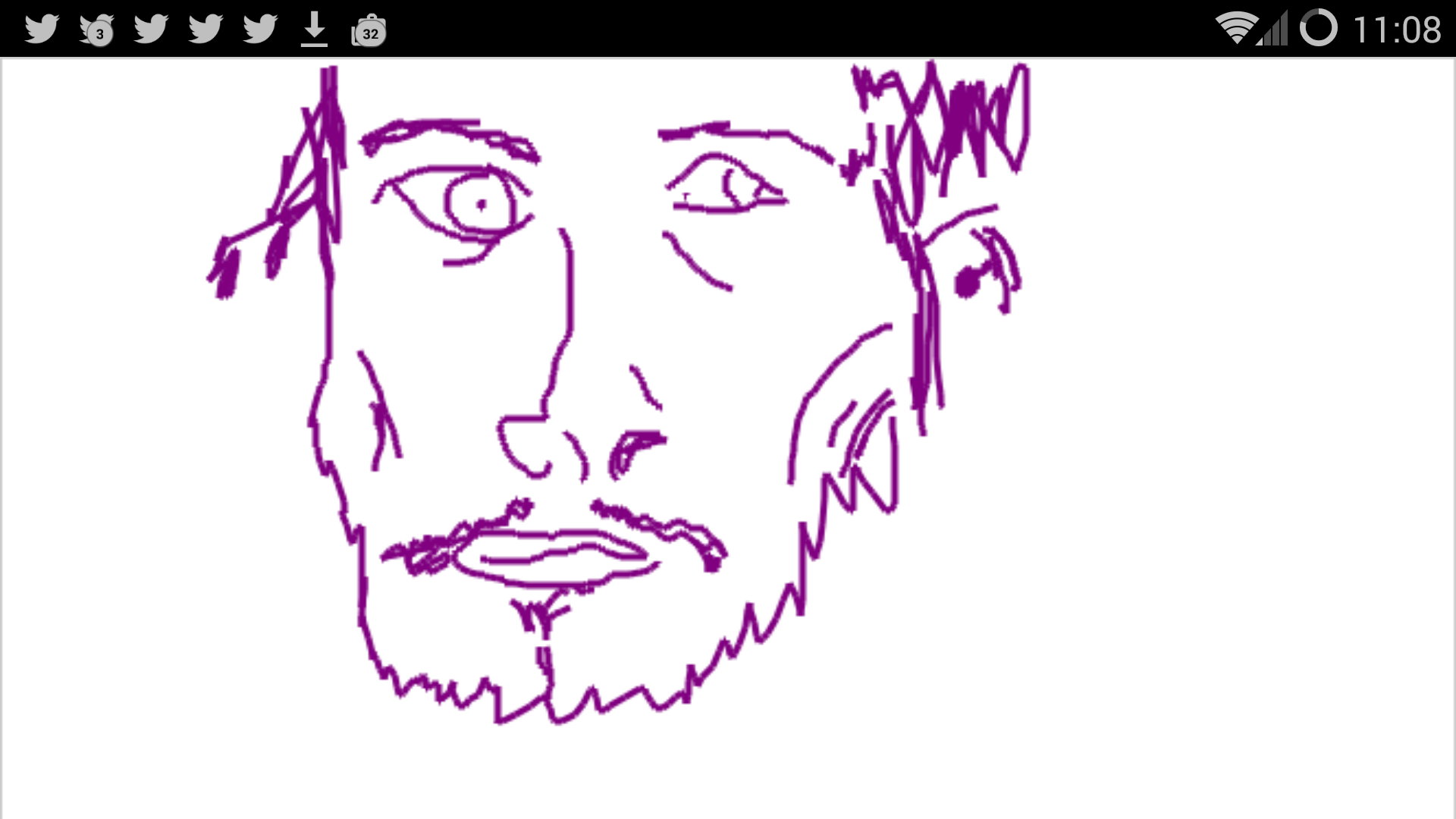

So instead we draw a line between each successive pointer position, to ensure they are joined up:

```javascript
function draw(e) {
  // Only draw once we have a previous point to join to
  if (lastPt !== null) {
    ctx.beginPath();
    // Start at previous point
    ctx.moveTo(lastPt.x, lastPt.y);
    // Line to latest point
    ctx.lineTo(e.pageX, e.pageY);
    // Draw it!
    ctx.stroke();
  }
  // Store latest pointer
  lastPt = {x: e.pageX, y: e.pageY};
}
```
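The lastPt bookkeeping in draw can also be separated from the canvas calls, which makes the logic easy to test on its own. A sketch with hypothetical names: `createStroke` returns an object whose `move` gives back the segment to draw, or null for the first point of a path, and whose `end` plays the role of setting lastPt to null.

```javascript
// Hypothetical helper: remember the previous point of a single pointer path
// and turn each new point into a line segment to draw.
function createStroke() {
  var lastPt = null;
  return {
    // Returns {from, to} for a segment, or null if this is the first point
    move: function (x, y) {
      var point = { x: x, y: y };
      var segment = lastPt === null ? null : { from: lastPt, to: point };
      lastPt = point;
      return segment;
    },
    // Forget the previous point so the next path starts fresh
    end: function () {
      lastPt = null;
    }
  };
}

// Drawing a returned segment on a 2D context:
// var s = stroke.move(e.pageX, e.pageY);
// if (s) { ctx.beginPath(); ctx.moveTo(s.from.x, s.from.y);
//          ctx.lineTo(s.to.x, s.to.y); ctx.stroke(); }
```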

When the pointer path is finished—when the user removes his finger or stylus, or stops pressing the mouse button—then we want to stop drawing on the canvas. So we listen for the pointerup event, and call a function endPointer when it’s triggered:

```javascript
canvas.addEventListener("pointerup", endPointer, false);

function endPointer(e) {
  // Stop tracking the pointermove event
  canvas.removeEventListener("pointermove", draw, false);
  // Set last point to null to end our pointer path
  lastPt = null;
}
```
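pointerup is not the only way a path can end. As listed in the event table, pointercancel fires when the browser decides the pointer has ended (palm rejection, for example), and if a mouse button is released outside the canvas, pointerup goes to whatever element is under the pointer instead. One way to handle both, sketched below, is to register the end handler for pointercancel too, and to call `setPointerCapture()` on pointerdown so that later events for that pointer keep targeting the canvas; capture is released automatically after pointerup or pointercancel. `attachPathHandlers`, `startPointer` and `endPointer` are hypothetical names here.

```javascript
// Hypothetical wiring: startPointer/endPointer handle the start and end of
// a path. Capturing the pointer keeps its pointermove and pointerup events
// targeted at `target` even when the pointer leaves its bounds.
function attachPathHandlers(target, startPointer, endPointer) {
  target.addEventListener("pointerdown", function (e) {
    if (target.setPointerCapture) {
      target.setPointerCapture(e.pointerId);
    }
    startPointer(e);
  }, false);
  // Either event ends this pointer's interaction
  target.addEventListener("pointerup", endPointer, false);
  target.addEventListener("pointercancel", endPointer, false);
}
```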

Pointer Events gesture example

Activate the pointermove example by clicking or touching the image of Ryan Gosling below, and point your way to your own masterpiece.

The full code listing is given below:

```html
<html>
<head>
<style>
  /* Disable intrinsic user agent touch behaviors (such as panning or zooming) */
  canvas {
    touch-action: none;
  }
</style>
<script type='text/javascript'>
  var lastPt = null;
  var canvas;
  var ctx;
  var offset;

  function init() {
    canvas = document.getElementById("mycanvas");
    ctx = canvas.getContext("2d");
    // Page position of the canvas, used to convert page to canvas coordinates
    offset = getOffset(canvas);

    if (window.PointerEvent) {
      canvas.addEventListener("pointerdown", function() {
        canvas.addEventListener("pointermove", draw, false);
      }, false);
      canvas.addEventListener("pointerup", endPointer, false);
    } else {
      // Provide fallback for user agents that do not support Pointer Events
      canvas.addEventListener("mousedown", function() {
        canvas.addEventListener("mousemove", draw, false);
      }, false);
      canvas.addEventListener("mouseup", endPointer, false);
    }
  }

  // Event handler called for each pointermove (or mousemove) event:
  function draw(e) {
    var x = e.pageX - offset.left;
    var y = e.pageY - offset.top;
    if (lastPt != null) {
      ctx.beginPath();
      // Start at previous point
      ctx.moveTo(lastPt.x, lastPt.y);
      // Line to latest point
      ctx.lineTo(x, y);
      // Draw it!
      ctx.stroke();
    }
    // Store latest pointer
    lastPt = {x: x, y: y};
  }

  // Same helper as in the previous listing
  function getOffset(obj) {
    var offsetLeft = 0;
    var offsetTop = 0;
    do {
      if (!isNaN(obj.offsetLeft)) {
        offsetLeft += obj.offsetLeft;
      }
      if (!isNaN(obj.offsetTop)) {
        offsetTop += obj.offsetTop;
      }
    } while (obj = obj.offsetParent);
    return {left: offsetLeft, top: offsetTop};
  }

  function endPointer(e) {
    // Stop tracking the pointermove (and mousemove) events
    canvas.removeEventListener("pointermove", draw, false);
    canvas.removeEventListener("mousemove", draw, false);
    // Set last point to null to end our pointer path
    lastPt = null;
  }
</script>
</head>
<body onload="init()">
  <canvas id="mycanvas" width="500" height="500" style="border:1px solid black;"></canvas>
</body>
</html>
```

Multi-touch pointer events

What touch-friendly input API would be complete without multi-touch capabilities? So, let’s see how to do it—it’s a little easier to implement than for touch events.

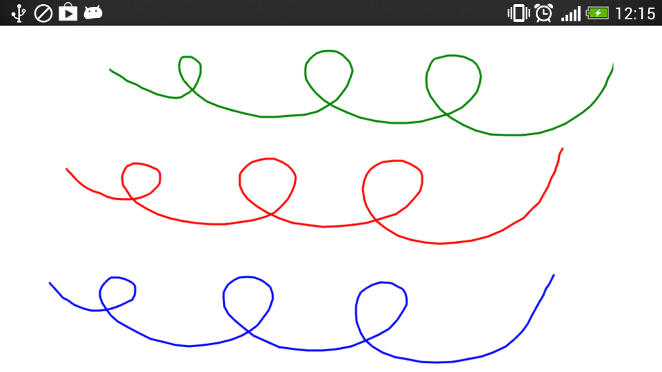

In this example we’ll just extend the previous pointermove example to work with multiple concurrent touches. To do this, we’ll assign different colours so that we can keep track of the traces of different pointers.

```javascript
var colours = ['red', 'green', 'blue', 'yellow', 'black'];
```

and

```javascript
// Key the colour based on the id of the Pointer
ctx.strokeStyle = colours[id % 5];
ctx.lineWidth = 3;
```

As with the last example, we need to draw lines between each of the pointer positions. Since we are tracing multiple pointers, we need to keep track of the last point of each active pointer, instead of just a single pointer as in the last example. So we’ll use an associative array of last points keyed by the pointerId property of the pointers. We define our associative array as an Object, and add associative values as follows:

```javascript
// This will be our associative array
var multiLastPt = new Object();
...
// Get the id of the pointer associated with the event
var id = e.pointerId;
...
// Store coords
multiLastPt[id] = {x: e.pageX, y: e.pageY};
```

The rest of the code is much the same, except that now, when we end a pointer trace, we also want to remove it from the array of last points with:

```javascript
delete multiLastPt[id];
```

That’s it!
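The associative array can equally be written with an ES2015 `Map`, which is designed for keyed collections and can't collide with properties inherited from `Object.prototype`. The sketch below combines the per-pointer last point with the colour choice, using `pointerId % colours.length` as the listing does with `% 5`. `createMultiStroke` is a hypothetical helper that only does the bookkeeping; drawing each returned segment works as in the single-pointer example.

```javascript
// Hypothetical helper: track the last point of every active pointer,
// keyed by pointerId, and produce coloured segments for drawing.
function createMultiStroke(colours) {
  var lastPts = new Map();
  return {
    // Call on pointerdown: start a new path for this pointer
    start: function (e) {
      lastPts.set(e.pointerId, { x: e.pageX, y: e.pageY });
    },
    // Call on pointermove: returns a segment, or null if the pointer is not down
    move: function (e) {
      var last = lastPts.get(e.pointerId);
      if (last === undefined) {
        return null;
      }
      var to = { x: e.pageX, y: e.pageY };
      lastPts.set(e.pointerId, to);
      return { from: last, to: to, colour: colours[e.pointerId % colours.length] };
    },
    // Call on pointerup / pointercancel: forget this pointer
    end: function (e) {
      lastPts.delete(e.pointerId);
    },
    // Number of pointers currently being traced
    activeCount: function () {
      return lastPts.size;
    }
  };
}
```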

Pointer Events multi-touch example

Full code listing below:

```html
<!DOCTYPE html>
<html>
<head>
<title>HTML5 multi-touch</title>
<style>
  canvas {
    touch-action: none;
  }
</style>
<script>
  var canvas;
  var ctx;
  // Last point of each active pointer, keyed by pointerId
  var lastPt = new Object();
  var colours = ['red', 'green', 'blue', 'yellow', 'black'];

  function init() {
    canvas = document.getElementById('mycanvas');
    ctx = canvas.getContext("2d");
    if (window.PointerEvent) {
      canvas.addEventListener("pointerdown", function(e) {
        startPointer(e);
        canvas.addEventListener("pointermove", draw, false);
      }, false);
      canvas.addEventListener("pointerup", endPointer, false);
    } else {
      // Provide fallback for user agents that do not support Pointer Events
      canvas.addEventListener("mousedown", function(e) {
        startPointer(e);
        canvas.addEventListener("mousemove", draw, false);
      }, false);
      canvas.addEventListener("mouseup", endPointer, false);
    }
  }

  // Mouse events have no pointerId, so treat the mouse as pointer 0
  function getId(e) {
    return e.pointerId !== undefined ? e.pointerId : 0;
  }

  // Begin a trace, so only pointers that are down draw (a hovering mouse doesn't)
  function startPointer(e) {
    lastPt[getId(e)] = {x: e.pageX, y: e.pageY};
  }

  function draw(e) {
    var id = getId(e);
    if (lastPt[id]) {
      ctx.beginPath();
      ctx.moveTo(lastPt[id].x, lastPt[id].y);
      ctx.lineTo(e.pageX, e.pageY);
      ctx.strokeStyle = colours[id % 5];
      ctx.stroke();
      // Store last point
      lastPt[id] = {x: e.pageX, y: e.pageY};
    }
  }

  function endPointer(e) {
    canvas.removeEventListener("mousemove", draw, false);
    // Terminate this touch
    delete lastPt[getId(e)];
  }
</script>
</head>
<body onload="init()">
  <canvas id="mycanvas" width="500" height="500">
    Canvas element not supported.
  </canvas>
</body>
</html>
```

Browser support

We’ve previously covered the interesting and coloured history behind browser support for the Pointer Events API, so we won’t repeat it here. Currently, only IE supports the Pointer Events API. However, all the other major browsers, with the exception of Apple’s Safari, have committed to implementing the API, and work is in progress. In the table below, orange indicates work in progress.

| | Android | iOS | IE Mobile | Opera Mobile | Opera Classic | Opera Mini | Firefox Mobile | Chrome for Android |
|---|---|---|---|---|---|---|---|---|
| Pointer events | | | | | | | | |

(Sources: caniuse.com, DeviceAtlas, mobilehtml5.org)

Conclusion

In this article we took a look at the HTML5 Pointer Events API. We noted that it is less well supported than the Touch Events API, but that support is growing, with all the major browser manufacturers except Apple (Safari) committed to implementing it. It offers advantages over Touch Events in its unified approach to touch, mouse and pen inputs, and promises to make life easier for developers by relieving them of responsibility for some of the not-so-interesting details of supporting various input types in their webapps.

Image: W3C
