In this article we explore some touch-friendly drag and drop implementations. In particular we’ll be looking at DOM and canvas-based drag and drop approaches. We’ll also build on some of the things we learned in previous HTML5 articles on mobiForge.

But first, a clarification about what this article is about. There is an official HTML5 drag and drop API in the works. This is not the focus of this article. The main justification for omitting this is that there is very poor support for the current draft of the specification across mobile browsers. Thus anything we cover would be largely irrelevant to our discussion. And even without this API, there are plenty of ways that drag and drop can be implemented, as we will see.

It’s worth pointing out that, issues aside, the HTML5 drag and drop API goes beyond what we want to achieve with drag and drop in this article. For example, it supports things like dragging objects between applications, e.g. dragging an image from a native desktop and dropping it on a webpage. See the drag and drop API draft for more information on the specification. Interested readers might also be curious about the criticisms levelled at it.

In this article, we will limit our scope to the dragging and dropping of DOM and canvas objects, and the reordering of DOM nodes.

A simple drag and drop demo

We’ll start with a simple DOM-based drag and drop demo, which will make use of the HTML5 touch events API, specifically the touchmove event. We won’t use the canvas element in this first example.

First we add an element to our page – this is the element we are going to drag:

<div id="draggable" ></div>

We also include a small bit of CSS to style it as a red square. Importantly, we add the position:absolute property, so that the left and top values we set later take effect:

#draggable {width:50px;height:50px;background-color:#f00;position:absolute}

Now for some JavaScript. We’ll make use of the touchmove event that we learned about in our previous HTML5 Touch Events article. Once we capture the touch event, we will know its x and y coordinates, touch.pageX and touch.pageY. Our approach to drag and drop here will be to simply update the position of our draggable element with the values of touch.pageX and touch.pageY, by setting the CSS properties in JavaScript. We subtract 25 from each, half the width and height of the square, so that the square stays centred under the finger. So, the code will look like this:

var draggable = document.getElementById('draggable');

draggable.addEventListener('touchmove', function(event) {

var touch = event.targetTouches[0];

// Place element where the finger is

draggable.style.left = touch.pageX-25 + 'px';

draggable.style.top = touch.pageY-25 + 'px';

event.preventDefault();

}, false);

Try it out below:

This example made use of the Touch Events API, which we covered in more detail in this article. There is also another new(ish) API on the block: the Pointer Events API, with which it is also possible to implement drag and drop in your webpages. (We covered the Pointer Events API here.)

For comparison, the JavaScript to implement this example using the Pointer Events API is given below:

var draggable = document.getElementById('draggable');

draggable.addEventListener('pointermove', function(event) {

// Place element where the finger is

draggable.style.left = event.pageX-25 + 'px';

draggable.style.top = event.pageY-25 + 'px';

}, false);
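A slightly fuller Pointer Events version would normally move the element only while a pointer is pressed, and would remember where inside the square it was grabbed. The following is a minimal sketch under those assumptions, not a definitive implementation: makePointerDrag is a hypothetical helper name, and it assumes the element is absolutely positioned relative to the page, as in the CSS above.

```javascript
// Sketch: pointer-event drag that only moves while a pointer is held down.
// `makePointerDrag` is a hypothetical helper, not part of any API.
function makePointerDrag(el) {
  var activeId = null;      // pointerId currently dragging, or null
  var grabX = 0, grabY = 0; // offset from the element's origin to the pointer

  return {
    down: function (e) {
      activeId = e.pointerId;
      grabX = e.pageX - (parseFloat(el.style.left) || 0);
      grabY = e.pageY - (parseFloat(el.style.top) || 0);
      // Keep receiving events even if the pointer leaves the element
      if (el.setPointerCapture) el.setPointerCapture(e.pointerId);
    },
    move: function (e) {
      if (e.pointerId !== activeId) return; // not dragging with this pointer
      el.style.left = (e.pageX - grabX) + 'px';
      el.style.top = (e.pageY - grabY) + 'px';
    },
    up: function (e) {
      if (e.pointerId === activeId) activeId = null;
    }
  };
}

// Browser wiring (skipped when there is no DOM, e.g. under Node)
if (typeof document !== 'undefined') {
  var box = document.getElementById('draggable');
  if (box) {
    var drag = makePointerDrag(box);
    box.style.touchAction = 'none'; // stop the browser panning instead of dragging
    box.addEventListener('pointerdown', drag.down, false);
    box.addEventListener('pointermove', drag.move, false);
    box.addEventListener('pointerup', drag.up, false);
    box.addEventListener('pointercancel', drag.up, false);
  }
}
```

Keeping the handlers separate from the wiring means the drag logic can be exercised with plain objects, without a browser.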

Multi-touch drag and drop

If we had a number of elements that we wanted to make draggable we could apply a common CSS class to each draggable element, and in a similar way attach the touchmove event listener to each object of this class. In the HTML we add three divs:

<div class="draggable" id="d1" ></div> <div class="draggable" id="d2" ></div> <div class="draggable" id="d3" ></div>

with a small bit of CSS for colours, and the initial positioning:

.draggable {width:50px;height:50px;position:absolute}

#d1 {background-color:#f00;}

#d2 {background-color:#0f0;left: 100px;}

#d3 {background-color:#00f;left: 200px;}

And finally we need to modify the original JavaScript to add the listener to each of the draggable elements:

var nodeList = document.getElementsByClassName('draggable');

for(var i=0;i<nodeList.length;i++) {

var obj = nodeList[i];

obj.addEventListener('touchmove', function(event) {

var touch = event.targetTouches[0];

// Place element where the finger is

event.target.style.left = touch.pageX + 'px';

event.target.style.top = touch.pageY + 'px';

event.preventDefault();

}, false);

}

Multi-touch drag and drop live example

Drag the squares!

What’s this useful for?

Using this approach, we’re basically dragging elements around the page, and positioning them via CSS. The DOM structure remains unchanged. So this is neat, but what’s it good for? Well, if we were inclined, we could also change the DOM structure based on where the drop occurred, instead of only modifying CSS properties. This might be useful for dropping objects onto targets, perhaps in a game or some other application, or it might be useful for reordering items in a list. In either case we will need some kind of touch/target collision detection to determine if we dropped the item in the correct place.
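As a rough illustration of that collision detection, here is a minimal sketch that checks a drop point against a list of target rectangles. The names pointInRect and findDropTarget are hypothetical helpers, and the rectangles are assumed to have the left/top/width/height shape that getBoundingClientRect() returns.

```javascript
// Sketch: find which drop target (if any) contains the drop point.
// `pointInRect` and `findDropTarget` are hypothetical helper names.
function pointInRect(x, y, rect) {
  return x >= rect.left && x <= rect.left + rect.width &&
         y >= rect.top && y <= rect.top + rect.height;
}

function findDropTarget(x, y, targets) {
  for (var i = 0; i < targets.length; i++) {
    if (pointInRect(x, y, targets[i].rect)) return targets[i];
  }
  return null; // dropped outside every target
}

// Example with two hard-coded targets
var targets = [
  { id: 'bin',    rect: { left: 0,   top: 0, width: 100, height: 100 } },
  { id: 'basket', rect: { left: 200, top: 0, width: 100, height: 100 } }
];
var hit = findDropTarget(250, 50, targets);  // the 'basket' target
var miss = findDropTarget(150, 50, targets); // null: between the two targets
```

In a touchend handler, the drop point could be taken from event.changedTouches[0].clientX and clientY, which are in the same viewport coordinates as getBoundingClientRect().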

Canvas drag and drop

If your application has a graphical emphasis, then the choice to use canvas is probably a sensible one. While graphical applications are possible with DOM based approaches, as more graphical elements are added, the DOM can become cluttered and performance can be affected. Since canvas is simply rendered as a bitmap, it does not have the same overhead. This comes at a price however. Since there are no actual elements, just the canvas bitmap, there is no event handling API, so this must be managed by the application.

Having said this, we can drag an element around on canvas much as we can drag an element around on the page, but we have a little extra work to do because of the lack of an event handling API. Drag and drop on a DOM element is easier, since we can detect the element in which the event occurred.

With canvas, we will know the coordinates where a touch started or ended, but we will need to determine what objects on the canvas might have been potential targets of the interaction. A simple approach would be to maintain a list of objects, and their bounding boxes, and for each touch event, to match against this list, to find what object has been interacted with, and to make it the target of the touch. This is the approach we take in the following example:

Simple Canvas drag and drop example

In this example, we show how to implement a simple drag and drop on the canvas element. First we start with the canvas element.

<canvas id="dndcanvas"> Sorry, canvas not supported </canvas>

Next, to implement drag and drop, we’re going to maintain a list of draggable objects simply by recording their x and y positions. We’ll use the touchmove event as before, and update the list as necessary whenever this event is detected. We show how to do this with one object first, and then we’ll look at multiple objects where things get a little trickier.

First, in our init function we’ll define the initial position of our draggable object, along with its width and height. We will also attach an event handler function to our draggable object, for the touchmove event. When this event occurs, we’ll call a touch-detection function to determine if the touch was close enough to our object. If it is, then we’ll update the object with the coordinate of the touch, and redraw the canvas.

function init() {

// Initialise our object

obj = {x:50, y:50, w:70, h:70};

canvas = document.getElementById("dndcanvas");

canvas.width = window.innerWidth;

canvas.height = window.innerHeight;

// Add eventlistener to canvas

canvas.addEventListener('touchmove', function(event) {

//Assume only one touch/only process one touch even if there's more

var touch = event.targetTouches[0];

// Is touch close enough to our object?

if(detectHit(obj.x, obj.y, touch.pageX, touch.pageY, obj.w, obj.h)) {

// Assign new coordinates to our object

obj.x = touch.pageX;

obj.y = touch.pageY;

// Redraw the canvas

draw();

}

event.preventDefault();

}, false);

draw();

}

Now, we need to define our touch detection function. It simply takes two points and returns the value true if they are within an acceptable distance of each other. We could come up with more complex detection based on the shape of the bounding box for instance, but this will do for now.

function detectHit(x1,y1,x2,y2,w,h) {

//Very simple detection here

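// Compare absolute distances, so a touch far to the left of or above the object is rejected too

if(Math.abs(x2-x1)>w) return false;

if(Math.abs(y2-y1)>h) return false;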

return true;

}

Finally, our draw function:

function draw() {

canvas = document.getElementById("dndcanvas");

var ctx = canvas.getContext('2d');

// Clear the canvas

ctx.clearRect(0, 0, canvas.width, canvas.height);

ctx.fillStyle = 'blue';

// Draw our object in its new position

ctx.fillRect(obj.x, obj.y, obj.w, obj.h);

}

Canvas drag and drop live demo

Drag the rectangle around the canvas! (touch screens only)

We can extend this a bit to handle multiple touches and objects. The principle is the same, but it becomes a little bit trickier to keep track of everything.

Implementing multi-touch canvas drag and drop

We follow the same approach to the single touch drag example above. This time though, we must maintain an array of objects and their positions, and we must also keep track of which touches are currently assigned to which objects.
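The bookkeeping could look something like the following. This is a sketch under stated assumptions rather than the code behind the demo: the names grabbed, hitTest, onStart, onMove and onEnd are hypothetical, the array is called obj to match the draw function, and the canvas is assumed to sit at the top-left of the page so that pageX and pageY can be used as canvas coordinates.

```javascript
// Sketch only: tracks which touch is dragging which object.
var obj = [
  { x: 50,  y: 50, w: 70, h: 70, colour: 'blue' },
  { x: 200, y: 50, w: 70, h: 70, colour: 'red' }
];
var grabbed = {}; // touch.identifier -> { index, dx, dy }

function hitTest(o, x, y) {
  return x >= o.x && x <= o.x + o.w && y >= o.y && y <= o.y + o.h;
}

// touchstart: bind each new touch to the topmost object under it
function onStart(touches) {
  for (var t = 0; t < touches.length; t++) {
    var touch = touches[t];
    for (var i = obj.length - 1; i >= 0; i--) {
      if (hitTest(obj[i], touch.pageX, touch.pageY)) {
        grabbed[touch.identifier] = {
          index: i,
          dx: touch.pageX - obj[i].x, // where inside the object it was grabbed
          dy: touch.pageY - obj[i].y
        };
        break;
      }
    }
  }
}

// touchmove: move only the objects that a touch has grabbed
function onMove(touches) {
  for (var t = 0; t < touches.length; t++) {
    var g = grabbed[touches[t].identifier];
    if (!g) continue;
    obj[g.index].x = touches[t].pageX - g.dx;
    obj[g.index].y = touches[t].pageY - g.dy;
  }
}

// touchend / touchcancel: forget the touches that have finished
function onEnd(touches) {
  for (var t = 0; t < touches.length; t++) {
    delete grabbed[touches[t].identifier];
  }
}
```

Each function would be called from the matching canvas event listener with event.changedTouches, followed by event.preventDefault() and a redraw.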

Finally, our draw function is updated to iterate over our array of objects and draw each one:

function draw() {

canvas = document.getElementById("dndcanvas");

var ctx = canvas.getContext('2d');

// Clear canvas

ctx.clearRect(0, 0, canvas.width, canvas.height);

// Iterate over object list and draw

for(var i=0;i<obj.length;i++) {

ctx.fillStyle = obj[i].colour;

ctx.fillRect(obj[i].x, obj[i].y, obj[i].w, obj[i].h);

}

}

So, while this might be manageable for very simple applications, it becomes rather difficult quite quickly. Most developers won’t want to be bothering themselves with these details unless they are building a framework, or have very specific requirements.

Enter the framework

In this section we take a quick look at a number of frameworks which support drag and drop. We’ll look at both DOM- and canvas-based frameworks – i.e. their drag and drop implementations use the DOM directly, or work with the canvas element. We’ll start first with the canvas based frameworks. We’ll take a look at two of the most popular ones here. There are plenty more, with differing trade-offs. A comparison chart of the most popular libraries can be found here.

KineticJS

KineticJS is a framework for canvas animation. It abstracts all the pesky details involved in managing the state of objects you have added to your canvas, and provides an interface for applying animations and other operations to the canvas objects. Drag and drop is one such operation supported by KineticJS.

Figure 1: KineticJS logo

Drag and drop with KineticJS

We can illustrate the benefits of using a framework like KineticJS to manage the drag and drop quite easily. Without going into too much detail about KineticJS, we’ll introduce a couple of concepts as we go along.

KineticJS uses the concept of a stage onto which we add layers of shapes and groups of shapes. An example of a possible hierarchy is shown below (reproduced from the KineticJS wiki):

               Stage
                 |
          +------+------+
          |             |
        Layer         Layer
          |             |
    +-----+-----+     Shape
    |           |
  Group       Group
    |           |
    +       +---+---+
    |       |       |
  Shape   Group   Shape
            |
            +
            |
          Shape

So, to build a basic drag and drop example with KineticJS, we’ll define our stage, and add to it a layer containing a draggable rectangle. First, the stage.

var stage = new Kinetic.Stage({

container: 'container',

width: window.innerWidth,

height: window.innerHeight

});

Next we’ll add a rectangle. KineticJS has a number of built in shapes:

var rect = new Kinetic.Rect({

x: 50,

y: 50,

width: 75,

height: 75,

fill: 'green',

draggable: true

});

Note importantly that we’ve set the draggable property to true. We can add this property at any level in the hierarchy, to make a shape, group of shapes, layer, or even the whole stage draggable.

Now we just need to add the rectangle to the layer, and then the layer to the stage:

// Define new layer

var layer = new Kinetic.Layer();

// add the rectangle to the layer

layer.add(rect);

// add the layer to the stage

stage.add(layer);

We can add other shapes to the same or other layers, and they will be draggable too!

And that’s it – certainly a lot easier than having to manage objects and target hit detection ourselves.

KineticJS live demo

KineticJS comes in at around 110KB (compresses to approx 29KB). Whether this is acceptable to include in your mobile webpages will depend on your target devices, and your requirements. However, it is definitely worth noting here that it is possible to build a custom KineticJS bundle by picking and choosing only the features you require. KineticJS also has the advantage that it will work with desktop or touchscreens alike, abstracting the actual events, whether touch-based or mouse-based from the developer.

Update 2015/07/22 This project is parked and is no longer being maintained. The author mentions it is “pretty darn stable”. However, there are 148 open issues. The code is hosted: https://github.com/ericdrowell/KineticJS/.

EaselJS

Another very popular framework is EaselJS. EaselJS also uses the concept of a stage to represent all that happens on the canvas element. Like KineticJS, EaselJS also facilitates a hierarchical object structure. Where KineticJS has a layer, EaselJS has a container, and both support nesting of drawable shapes. The EaselJS download is approximately 81KB minified.

To start off with EaselJS, we create a stage on a canvas element:

stage = new createjs.Stage("easelcanvas");

Now let’s try to add a draggable rectangle as we did with KineticJS. We create a shape object, and then we use it to draw the rectangle:

var rect = new createjs.Shape();

rect.graphics.beginFill("red").drawRect(0,0,100,100);

To make this object draggable, we attach a listener for the pressmove event, and define what should happen when it is triggered. We simply update the x and y properties of the event target, i.e. our rectangle. Note that we indicate that the stage should be updated after this:

rect.on("pressmove",function(e) {

// Update currentTarget, to which the event listener was attached

e.currentTarget.x = e.stageX;

e.currentTarget.y = e.stageY;

// Redraw stage

stage.update();

});

Finally we can add the rectangle to the stage:

stage.addChild(rect);

As with KineticJS, mouse and touch input are supported seamlessly, taking the burden off the developer. However, there is clearly a little bit more work to do for drag and drop when using EaselJS. And we note again, there’s a lot more to EaselJS than just drag and drop.

This project is still under active development; its most recent release was on 28th May 2015.

jQuery

jQuery, perhaps the most widely used general purpose JavaScript framework today, also supports drag and drop of DOM elements, in a number of different guises, through its jQuery UI library. However, this is not yet compatible with touchscreen devices without including some other plugin or hack, such as the jQuery UI Touch Punch plugin.

Two interesting jQuery UI interactions we can apply to DOM elements are draggable and sortable.

The following code will make an element draggable:

<style>

#draggable { width: 70px; height: 70px; padding: 0.5em; }

</style>

<script type='text/javascript'>

$(function() {

$( "#draggable" ).draggable();

});

</script>

</head>

<body>

<div id="draggable" class="ui-widget-content">

<p>Drag me around</p>

</div>

As mentioned, this will not work out of the box on touch devices. To get around this, the following code can be used (reproduced from this stackoverflow thread). The idea is to simulate mouse events with touch events, so that mouse-optimised code will be compatible with a touch device. This code needs to be executed after the DOM has loaded:

function touchHandler(event)

{

var touches = event.changedTouches,

first = touches[0],

type = "";

switch(event.type)

{

case "touchstart": type = "mousedown"; break;

case "touchmove": type="mousemove"; break;

case "touchend": type="mouseup"; break;

default: return;

}

var simulatedEvent = document.createEvent("MouseEvent");

simulatedEvent.initMouseEvent(type, true, true, window, 1,

first.screenX, first.screenY,

first.clientX, first.clientY, false,

false, false, false, 0/*left*/, null);

first.target.dispatchEvent(simulatedEvent);

event.preventDefault();

}

function init()

{

document.addEventListener("touchstart", touchHandler, true);

document.addEventListener("touchmove", touchHandler, true);

document.addEventListener("touchend", touchHandler, true);

document.addEventListener("touchcancel", touchHandler, true);

}

An alternative to copying this code into your applications is to include the Touch Punch library instead. It achieves a similar result, by simulating mouse events from touch input. Interestingly, for compatibility purposes, the Pointer Events API does something similar: browsers fire compatibility mouse events after the corresponding pointer events, so that code written only for mouse input can still be supported.

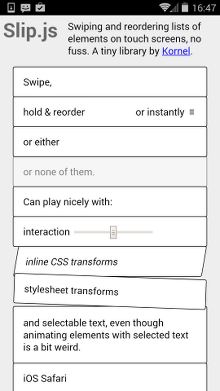

Slip.js

Slip.js is a mobile oriented DOM-based gesture library, which includes a drag to reorder behaviour.

Figure 2: Slip.js demo page

Although Slip.js is designed with touchscreens in mind, it has been implemented to support traditional mouse events too, so it should be fine on desktop and mobile.

Slip.js Example

First we start off with the list that we want to make slippy:

<ul id="slippylist">

<li>Item 1</li>

<li>Item 2</li>

<li>Item 3</li>

</ul>

To make this a slippy list, the list node should be passed as an argument to the Slip constructor:

<script src='slip.js'></script>

<script type='text/javascript'>

var list = document.getElementById('slippylist');

new Slip(list);

</script>

We still have a little work to do, however. We must now attach some or all of the events that are offered by the Slip.js library to define exactly how the list will behave. Here we are interested in the slip:reorder event, which will allow us to drag items in a list to reorder them. To make this list reorderable, we would attach this event as follows:

list.addEventListener('slip:reorder', function(e) {

e.target.parentNode.insertBefore(e.target, e.detail.insertBefore);

});
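If the list on the page mirrors an array in your application, the array needs the same reorder applied. The sketch below shows one way to do that with two splice calls. moveItem is a hypothetical helper, and it assumes the slip:reorder event exposes e.detail.originalIndex and e.detail.spliceIndex, as we recall from the Slip.js README; check this against the version you are using.

```javascript
// Sketch: mirror a list reorder in a plain array.
// `moveItem` is a hypothetical helper, not part of Slip.js.
function moveItem(items, originalIndex, spliceIndex) {
  var moved = items.splice(originalIndex, 1)[0]; // take the item out
  items.splice(spliceIndex, 0, moved);           // put it back at its new position
  return items;
}

// Example: drag the first item so that it lands in third place
var order = ['Item 1', 'Item 2', 'Item 3', 'Item 4'];
moveItem(order, 0, 2); // order is now Item 2, Item 3, Item 1, Item 4
```

Inside the slip:reorder listener this would be called as moveItem(order, e.detail.originalIndex, e.detail.spliceIndex), under the assumption stated above.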

Slip.js live demo

Drag the list items to reorder:

- Item 1

- Item 2

- Item 3

- Item 4

Hammer.js

Hammer.js is a library that implements a variety of gesture events, including swipe, tap, doubletap, pinch (in and out) and of course, drag. You still need to wire up the events yourself, but there are still benefits to be had over our basic implementation at the start of the article. The full list of supported gestures, events, and event data offered are listed on the Hammer.js github pages.

First, set up your draggable element as before:

<div id="hammerdrag"></div>

Now, in the parlance of 1980s and ’90s superstar MC Hammer, it’s Hammer Time! We apply the Hammer function to the elements that we want to Hammerise. So:

var element = document.getElementById("hammerdrag");

var hammertime = Hammer(element);

Now we need to define what happens when one of the Hammer events is fired. We’re interested in the drag and touch events here, so:

hammertime.on("drag", function(event) {

// Do something

});

The Do something above is much the same as we’ve had before – we can just update the CSS position of the element with the coordinates of the touch data returned by the Hammer library.

hammertime.on("drag", function(event) {

// Append 'px': a bare number is not a valid CSS length

element.style.left = event.gesture.touches[0].pageX + 'px';

element.style.top = event.gesture.touches[0].pageY + 'px';

});

So the whole thing doesn’t look too different from our first example. It’s arguably simpler in the multitouch scenario where we are dragging around multiple objects. It’s probably a good idea to point out, that there’s a lot more to Hammer.js than just dragging, and that it surfaces plenty of rich data about each touch, including speed, angle and some other goodies.

Another benefit to this library, and indeed the other DOM libraries, is that we don’t need to worry about different event types from different input devices i.e. the library seamlessly accommodates the input device without the developer having to worry whether the underlying event is a mousemove or a touchmove or a whatever.

Update 2015/07/22: This library appears to be still maintained. Since the article was originally written there has been a version 2 released: http://hammerjs.github.io/.
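For readers on version 2, the same drag could be sketched with its pan events. This is a minimal sketch, assuming the version 2 API as we understand it (new Hammer(element), the panstart and panmove events, deltaX and deltaY on the event, and a pan recognizer that is horizontal-only by default). panPosition is a hypothetical helper, and the element is assumed to be absolutely positioned.

```javascript
// Pure helper: new position = position at pan start + distance panned so far
function panPosition(start, deltaX, deltaY) {
  return { left: start.left + deltaX, top: start.top + deltaY };
}

// Browser wiring (skipped when the DOM or Hammer.js is not available)
if (typeof document !== 'undefined' && typeof Hammer !== 'undefined') {
  var el = document.getElementById('hammerdrag');
  var mc = new Hammer(el);
  // Allow panning in every direction, not only horizontally
  mc.get('pan').set({ direction: Hammer.DIRECTION_ALL });

  var start = { left: 0, top: 0 };
  mc.on('panstart', function () {
    // Remember where the element was when the drag began
    start = { left: el.offsetLeft, top: el.offsetTop };
  });
  mc.on('panmove', function (ev) {
    var p = panPosition(start, ev.deltaX, ev.deltaY);
    el.style.left = p.left + 'px';
    el.style.top = p.top + 'px';
  });
}
```

Working from the pan delta rather than the absolute touch position means the element does not jump to the finger when the drag starts.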

Conclusion

So that wraps up our coverage of drag and drop. For most requirements, there is probably a library that will suit your needs. Whether you use a canvas- or DOM-based approach depends on your requirements of course, and it’s unlikely you’ll need to choose between a canvas-based library and a DOM-based library. Rather you’ll be choosing between alternative DOM libraries, such as jQuery UI and Hammer.js, or between alternative canvas libraries, such as KineticJS and EaselJS.

A useful article covering the usage stats of the various canvas frameworks can be found here.

In the end, which library to use is a matter of matching library features to your requirements, and of course, personal preference.

Resources

- KineticJS

- EaselJS

- Hammer.js

- jQuery UI Draggable

- jQuery UI Sortable

- jQuery Touch Punch plugin

- Slip.js

- HTML5 drag and drop API draft specification

- http://www.quirksmode.org/blog/archives/2009/09/the_html5_drag.html

Dancing MC Hammer gif reproduced from Hammer.js github wiki.
